Argos Vision’s AI-powered traffic cameras seek to make roads safer, more efficient

Propelled by resources from the J. Orin Edson Entrepreneurship + Innovation Institute, ASU startup Argos Vision is taking its computer vision technology to the streets. The startup is developing smart traffic cameras that can passively capture, analyze and deliver droves of data to help cities improve road safety and efficiency.

It’s said that nothing is certain, except death and taxes. Let’s add a third certainty to that list: traffic.

All across the globe, traffic engineers and city planners are locked in an eternal struggle to improve the flow of traffic, the efficiency of streets and the safety of pedestrians, cyclists and drivers. Finding the best way to meet these goals requires an enormous amount of data, which is often difficult to collect and analyze.

Two Arizona State University entrepreneurs are making this data easier to understand and access. Mohammad Farhadi and Yezhou Yang founded Argos Vision, a tech startup developing smart traffic cameras that can passively capture, analyze and deliver droves of data to help cities improve road safety and efficiency.

Argos Vision emerged from Farhadi and Yang’s work as researchers in the School of Computing and Augmented Intelligence, one of the Ira A. Fulton Schools of Engineering. Yang, an assistant professor of computer science and engineering and director of the Active Perception Group, advised Farhadi as he pursued a doctorate in computer science. Farhadi earned his doctoral degree in spring 2022.

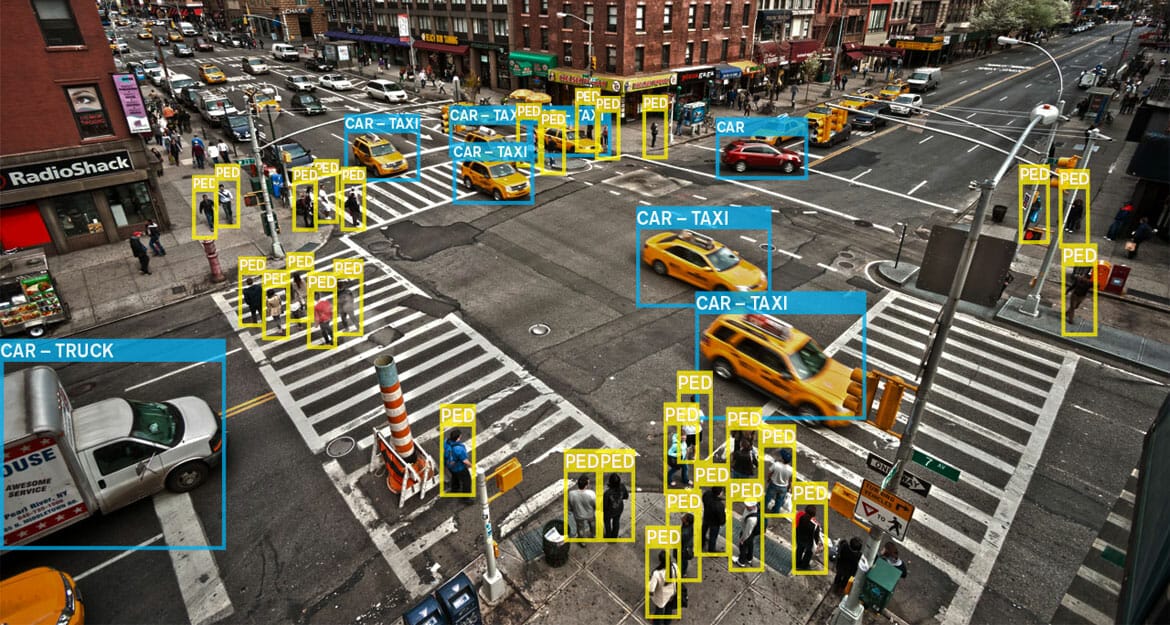

The pair created a self-contained, solar-powered traffic camera that uses on-board computer vision, a type of artificial intelligence, to identify and classify what it sees.

“We identified three major things we wanted to accomplish with this technology,” Farhadi says. “Cost reduction, privacy protection and rich metadata extraction.”

Installing traffic cameras can be costly to local governments. Closing intersections to add new power and network cable to existing infrastructure is a lengthy and expensive process. Argos Vision solves this financial roadblock with a self-contained camera system that runs off solar power and transmits data over a cellular network.

“We want to extract rich data that meets not only the minimum desire of cities, such as vehicle counting, but data that can be used in the future as well,” Farhadi says.

Named for the many-eyed giant of Greek myth, the Argos algorithm goes beyond simple counts to capture detailed contextual information, including a vehicle’s type, dimensions, color and markings. It can also develop a 3D model of vehicles for future reference.

Distinguishing vehicle type could be helpful for road maintenance. Roads degrade at different rates depending on their use, and understanding which vehicles use which roads at high rates may help cities better allocate resources and predict where preventative maintenance is most needed. For example, an Argos camera might observe large trucks commonly using a shortcut to access an industrial area.

“At that location, a city might elect to reinforce a road so they don’t have to replace it every year,” Farhadi says.
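
The road-maintenance idea above can be illustrated with a minimal sketch. It assumes each camera observation has already been reduced to a road segment name and a vehicle class; the segment names, the class labels, the sample data and the 50% threshold are all hypothetical and are not drawn from Argos Vision’s system.

```python
from collections import Counter

# Hypothetical observations: (road segment, vehicle class).
detections = [
    ("Industrial Shortcut", "truck"),
    ("Industrial Shortcut", "truck"),
    ("Industrial Shortcut", "car"),
    ("Industrial Shortcut", "truck"),
    ("Main Street", "car"),
    ("Main Street", "car"),
    ("Main Street", "truck"),
    ("Main Street", "car"),
    ("Main Street", "bus"),
]


def heavy_vehicle_share(records, heavy_classes=frozenset({"truck", "bus"})):
    """Return the fraction of heavy vehicles observed on each segment."""
    totals = Counter()
    heavy = Counter()
    for segment, vehicle_class in records:
        totals[segment] += 1
        if vehicle_class in heavy_classes:
            heavy[segment] += 1
    return {segment: heavy[segment] / totals[segment] for segment in totals}


def flag_segments(records, threshold=0.5):
    """List segments whose heavy-vehicle share meets the assumed threshold."""
    shares = heavy_vehicle_share(records)
    return sorted(seg for seg, share in shares.items() if share >= threshold)


flagged = flag_segments(detections)
```

With this sample data, only the shortcut is flagged, which is the kind of signal a city might use when deciding where to reinforce a road.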

To protect the privacy of everyone on the road, the Argos Vision technology does not employ facial recognition or collect identifying information, despite the detailed data it gathers.
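
One way to picture this kind of privacy-preserving metadata is an allow-list record that stores only descriptive attributes. The sketch below is an assumption for illustration, not Argos Vision’s actual data format: the `VehicleObservation` type, its field names and the sample input are all hypothetical.

```python
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class VehicleObservation:
    """Hypothetical record holding only descriptive, non-identifying fields."""
    vehicle_type: str
    length_m: float
    width_m: float
    color: str
    markings: str


def to_observation(raw: dict) -> VehicleObservation:
    """Copy only the allow-listed fields; anything else in `raw` is dropped."""
    allowed = [f.name for f in fields(VehicleObservation)]
    return VehicleObservation(**{name: raw[name] for name in allowed})


# Hypothetical raw input that includes a field the record never stores.
raw_detection = {
    "vehicle_type": "truck",
    "length_m": 7.2,
    "width_m": 2.5,
    "color": "white",
    "markings": "company logo",
    "license_plate": "ABC1234",
}

observation = to_observation(raw_detection)
```

Because the record is built from an allow-list rather than a block-list, any field not named on `VehicleObservation` never reaches storage.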

Argos extracts detailed information using a novel software framework developed by Farhadi. As the Argos cameras take images, a neural network analyzes each image’s content and distills it into its component parts. Much as our brains quickly separate what we see into distinct parts (a person, a dog on a leash, a bus stop), the neural network breaks a scene into labeled objects to contextualize the information.

Traditionally, neural networks are computationally and power intensive, which makes them hard to run on small devices such as cameras. But Argos Vision’s software allows its neural network to run on low power and provide real-time traffic monitoring that collects highly detailed data, Yang says.